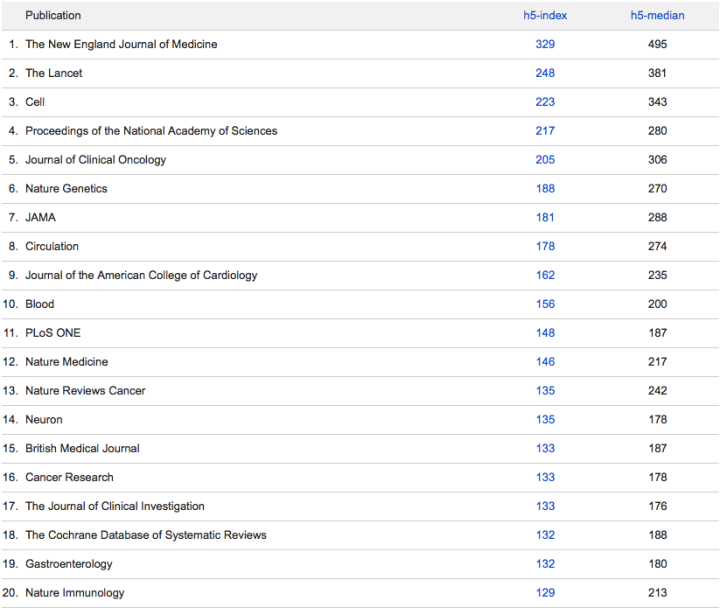

The 2014 Google Scholar Metrics were released a week or so ago. The list of the top twenty journals in Health and Medicine is: [drumroll]

Now, one thing that needs to be clarified is that the citation index Google Scholar uses is the h-index (computed over a period of 5 years). Interestingly, this is slightly different from the more famous Impact Factor. Before I discuss the pitfalls of this particular index, here is a quick recap of what the h-index is.

The h-index (also known as the h-number) was developed by UCSD physicist Jorge Hirsch (hence the “h”) to measure the relative importance of a theoretical physicist's work. The index takes into account not only the number of citations your papers have received, but also the number of papers you have produced. In Hirsch’s own words, the index is defined as follows:

> A scientist has index h if h of his/her Np papers have at least h citations each, and the other (Np − h) papers have no more than h citations each.

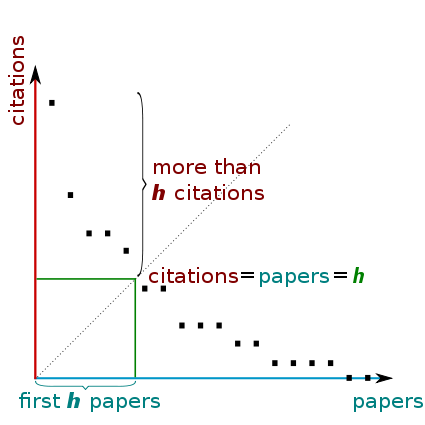

Let me explain with an example. Suppose I have published 20 papers. Let us assume five of these have no citations, seven have two citations each, another five have three citations each and the remaining three have four citations each. That means I have eight papers with at least three citations each, and the other twelve have no more than three citations each. However, only three of those eight papers count towards the index, so I have an h-index of 3. It cannot be 4, because only three of my papers have four or more citations. The best part of the h-index is that it rises rather quickly when one starts publishing but slows down over time. To go from an h-index of three to four, I need a fourth paper to be cited at least four times, in addition to the three I already have with four citations each.

Now, if you are wondering what the h5-median is, let me clarify that as well. Take, for example, The New England Journal of Medicine, which has an h5-index (the h-index computed over papers published in the last 5 years) of 329 and an h5-median of 495. This means that, among the papers it published in the last five years, the NEJM has 329 that have each been cited at least 329 times. Not bad, eh? But then, what is the 495 about? Well, those 329 articles, which make up the h5-index, have been cited a median of 495 times. More impressive!
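For readers who prefer to see the arithmetic spelled out, here is a minimal Python sketch of the calculation. The function names `h_index` and `h_median` are my own for illustration and are not taken from Google Scholar or any library; the citation counts are the 20 hypothetical papers from the example above.

```python
from statistics import median


def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


def h_median(citations):
    """Median citation count of the h most-cited papers."""
    h = h_index(citations)
    if h == 0:
        return 0
    ranked = sorted(citations, reverse=True)
    return median(ranked[:h])


# Five uncited papers, seven with 2 cites, five with 3, three with 4.
papers = [0] * 5 + [2] * 7 + [3] * 5 + [4] * 3

print(h_index(papers))        # 3
print(h_median(papers))       # 4
print(h_index(papers + [4]))  # 4: one more paper with four citations
```

Restricting the input to papers from the last five years would give the h5 versions of both numbers.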

Here is a graph (from Wikipedia) which explains this index pictorially:

The one major benefit of the h-index is that a single “star paper” will not skew the score. Suppose I have one paper with 50 cites but nothing else I have written has ever been cited; then my h-index is a measly ONE! An average-based measure like the Impact Factor would have been swayed by that publication with 50 cites and would have given me a higher score. Although the h-index is harder for journals to game, it still does not protect against self-citation (which, however, can be easily remedied). The upshot is that simply publishing research work is not enough to raise the index; the work has to be good enough to gain citations as well!
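A rough sketch of the “star paper” effect, under loudly stated assumptions: the nine uncited papers and the `steady` profile are hypothetical, and the plain mean of citations is only a stand-in for an average-based score, not the actual Impact Factor formula.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


def mean_citations(citations):
    """Plain average of citations per paper (a stand-in, not the Impact Factor)."""
    return sum(citations) / len(citations)


star = [50] + [0] * 9   # hypothetical: one hit paper, nine uncited ones
steady = [5] * 10       # hypothetical: ten papers with five cites each

print(h_index(star), mean_citations(star))      # 1 5.0
print(h_index(steady), mean_citations(steady))  # 5 5.0
```

Both profiles have the same average, but only the steady one earns a higher h-index.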

One major thing to keep in mind is that the h-index is meant to compare people at the same stage of their careers. Since it increases as one advances through a career, comparing the h-indices of individuals at different career points would be futile. Another issue is that it is an integer, which gives it little discriminatory power. Thus, some alternative is needed to separate two profiles that share the same h-index. Some workers have considered a rational interpolation between h and (h+1), but I am not going into all that complication now. There are several alternatives and modifications to the h-index covered on the Wikipedia page, which one can check out if so inclined.

Another concern I have is that the h-index may not be a robust tool for distinguishing between two journals with the same index value. Let us say Journal A has 50 papers which have been cited 50 times each, and Journal B has 500 papers, of which 50 have been cited 50 times each and the rest have very few citations, if any. Clearly, Journal B has a lower threshold of acceptance, and as a publishing author one would feel it is more prestigious to get published in Journal A. Whether such qualitative assertions about prestige hold any real meaning in terms of academic value is open to argument, but as far as the h-index is concerned, both journals are of the same standing.
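The Journal A versus Journal B comparison can be checked in a few lines. This is only a sketch: I assume the 450 “very few citations” papers in Journal B have one citation each, and `core_share` is a made-up helper name for the fraction of a journal's papers that count towards its h-index.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


def core_share(citations):
    """Fraction of all papers that count towards the h-index."""
    return h_index(citations) / len(citations)


journal_a = [50] * 50              # 50 papers, 50 cites each
journal_b = [50] * 50 + [1] * 450  # assumption: the other 450 have 1 cite each

print(h_index(journal_a), h_index(journal_b))        # 50 50
print(core_share(journal_a), core_share(journal_b))  # 1.0 0.1
```

The h-index is identical, while the share of papers behind it differs by a factor of ten.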

However, before concluding this post, let me present a personal viewpoint: I sort of despise this kind of rank-mongering in academia. While I realise these metrics are important tools for journals and researchers to evaluate themselves (and to appear more prestigious and desirable than their competitors), I think they somehow dilute the academic thrill. I know that is a big statement coming from a puny researcher like me, but to me, academia has always been about enjoyment and quality learning, rather than such rat races. As I read on some blog (I cannot find it through a Google search right now), such “bean-counting” endeavours seem to cheapen the thrill of discovery and the academic journey that the likes of Feynman held at such a premium (who, by the way, has an overall h-index of 57, with 66,705 citations!).

So, to end, I wonder if the spawning of all such indices is more reflective of our need to put a price tag, an ordinal rating, on everything, much like we do in a market. Unfortunately, in all this squabbling to measure the impact of research in quantifiable terms, we end up losing sight of the real, meaningful, long-term contributions researchers make to society as a whole. And while we are at it, I would be failing in my task if I did not mention the wonderful “antics” of Cyril Labbé, who created a fake researcher by the name of Ike Antkare and then, using SCIgen (the fake paper-producing software that has entertained science bloggers for years now), produced 102 papers which boosted Ike’s h-index to a sky-high 94.

So, beware the h-index (like almost everything else out there!)!

Indeed h-index & IF are commodification of research to survive in the rat race…

“commodification of research”… That is quite the turn of phrase sir! 🙂

This
